The past year has proven to be quite tumultuous for the autonomous driving industry. Shortening runways and pivots to more commercially viable ADAS seem to have become the norm. Nevertheless, leaders at Mobileye recently laid out the company’s path to consumer-level autonomy, which they believe is attainable in the near future. This new approach, presented at CES 2023, centers on a different way of talking and thinking about consumer AVs: unlike the engineer-driven SAE J3016 standard, it relies on a simplified, consumer-facing automation taxonomy. By laying out this consumer-oriented classification system, Mobileye hopes to bring more attention to the real benefits of autonomy in terms of safety, convenience and efficiency.

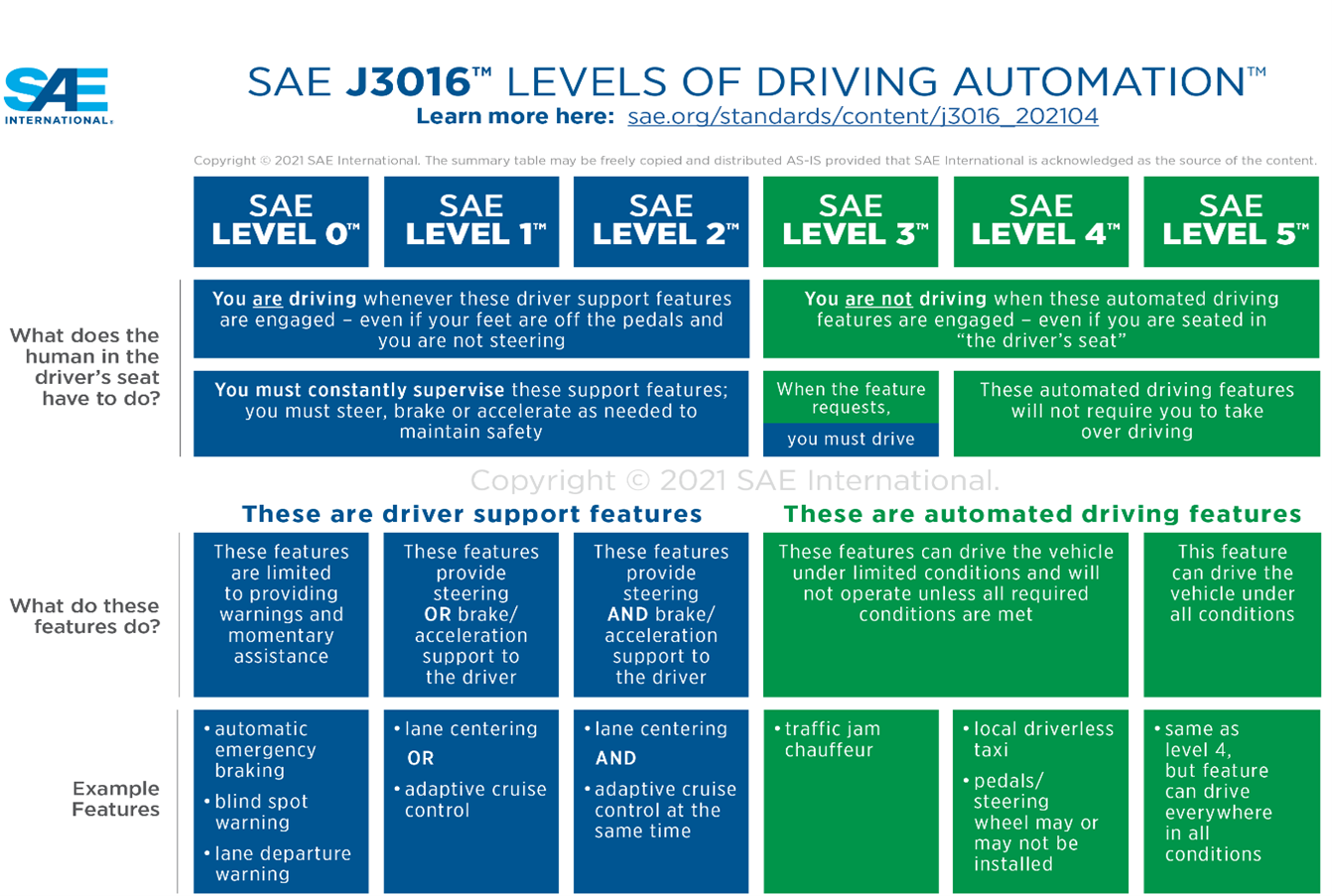

By way of quick background, SAE J3016 was drafted by engineers and for engineers in 2014. Today, the following rather complex descriptions of six levels of automation are widely accepted as the industry standard for AV development and regulation:

A plain reading of the above definitions evinces that the current Level 0-5 taxonomy is somewhat vague and often misleading from an end-user perspective. For example, the SAE classification system suggests a hierarchical ranking, with the higher levels being the most advanced and desirable, which is not really the case. On closer examination, the levels do not rate or grade the quality of a system’s automation; they describe only how driving responsibility is shared between the human driver and the automated driving system. This misleading level system has unintentionally led to a “race” to the highest levels, which has resulted in a massive misallocation of capital and resources into “moonshot” fully driverless technology rather than incremental advances in achievable driver safety technology.

Another significant flaw of the Level 0-5 taxonomy is its severely, perhaps even dangerously, confusing Level 3 description. According to J3016, Level 3 is an automated driving system, meaning that the person in the driver’s seat is not actually driving and does not need to pay attention at all times, a somewhat nebulous concept the SAE calls “Conditional Driving Automation.” At the same time, however, the driver, somewhat counterintuitively, must be ready to take over the driving task whenever the system disengages, even while purportedly relaxing. This creates a paradox: the driver may be inattentive while the system operates, yet must be fully alert the moment the system decides to hand off control.

Unanswered questions for Level 3 systems abound. For example, how much time should reasonably elapse between a “request” by the system for a driver to take over and the inattentive driver actually taking control of the vehicle? What about edge cases where the driver is asleep or otherwise incapacitated? What if an emergency situation arises and the inattentive driver needs to immediately take control of the vehicle? It seems to defy logic that you can tell a human they are not driving and do not need to pay attention while simultaneously relying upon them to take over in an unspecified period of time should the need arise. In fact, this contradictory expectation seems like a true recipe for autonomous disaster.

To combat the confusion surrounding SAE J3016, Mobileye has proposed simplified language that defines levels of autonomy in terms of four easily understandable categories. As reflected in the chart below, this simplified scheme concisely covers the entire automated driving spectrum:

(1) Eyes-on/Hands-on: this category covers situations where the human is responsible for the entire driving task and the automated system monitors and takes action only in rare circumstances. Here, the system supervises the driver and intervenes only when necessary to avoid an accident, as opposed to a system that continuously interferes with the human driver. Examples include basic ADAS, such as Autonomous Emergency Braking (AEB) and Lane Keep Assist (LKA). Under the SAE taxonomy, systems in this category would be classified as Level 1 or Level 2.

(2) Eyes-on/Hands-off: this category encompasses more advanced ADAS in which the driver may take their hands off the steering wheel (Hands-off) while the system is driving but must still supervise the system at all times (Eyes-on) within a specified Operational Design Domain (ODD). This category aims to create a synergistic relationship between the human and the system by having each do what it does best. Automated systems perform best in static or mundane driving conditions, where human failures are more common due to fatigue, distraction, or boredom. Conversely, human drivers perform best in challenging environments that demand close attention to their surroundings, where automated system failures are more common due to complicated “edge case” scenarios. These respective failure modes therefore complement each other, and with a reliable driver monitoring system (DMS), this relationship can be used to increase the overall safety of driving. Because this category has no counterpart in the SAE taxonomy, consumers sometimes wrongly perceive such systems as Level 3 or 4 rather than true Level 2 systems, e.g., Tesla’s FSD Beta.

(3) Eyes-off/Hands-off: this category describes truly autonomous systems that fully control the driving function within a specified ODD without any human supervision. The system handles all driving responsibilities within the ODD, and this is clear from the consumer’s perspective. Once the vehicle reaches the end of its ODD, the system issues a takeover command and driving responsibility transitions to the human driver. If the transition does not occur, the system initiates a minimal risk maneuver (MRM) so that the vehicle comes to a safe stop in a designated location. Under the SAE taxonomy, this category would be classified as either Level 3 or Level 4, depending on the ODD and takeover sequence. Of note, an Eyes-off/Hands-off system still requires a human in the driver’s seat for non-safety-related situations.

(4) No Driver: this category is reserved for AVs, such as Robotaxis, that do not require the presence of a human driver. Because no driver is present, a teleoperator is used to resolve non-safety-related situations.
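For readers who think in code, the four categories and the Eyes-off/Hands-off end-of-ODD handoff can be sketched as a small Python model. This is an illustrative sketch only, not Mobileye’s specification: the names `Category`, `SAE_LEVELS`, `who_supervises` and `end_of_odd_handoff`, and the `takeover_timeout_s` parameter, are hypothetical, and the SAE mapping simply restates the article’s description (which gives no SAE level for the No Driver category).

```python
from enum import Enum
from typing import Optional


class Category(Enum):
    """The four consumer-facing categories described above."""
    EYES_ON_HANDS_ON = "Eyes-on/Hands-on"
    EYES_ON_HANDS_OFF = "Eyes-on/Hands-off"
    EYES_OFF_HANDS_OFF = "Eyes-off/Hands-off"
    NO_DRIVER = "No Driver"


# SAE J3016 levels each category corresponds to, restating the article.
# The article gives no SAE mapping for "No Driver", so it is left empty.
SAE_LEVELS = {
    Category.EYES_ON_HANDS_ON: (1, 2),
    Category.EYES_ON_HANDS_OFF: (2,),
    Category.EYES_OFF_HANDS_OFF: (3, 4),
    Category.NO_DRIVER: (),
}


def who_supervises(category: Category) -> str:
    """Describe the human's role in each category."""
    if category is Category.EYES_ON_HANDS_ON:
        return "system supervises the human driver"
    if category is Category.EYES_ON_HANDS_OFF:
        return "human driver supervises the system"
    if category is Category.EYES_OFF_HANDS_OFF:
        return "no human supervision within the ODD"
    return "no human driver; a teleoperator handles non-safety-related situations"


def end_of_odd_handoff(response_time_s: Optional[float], takeover_timeout_s: float) -> str:
    """Eyes-off/Hands-off behavior when the vehicle reaches the end of its ODD.

    response_time_s is how long the human took to take over after the
    takeover command (None if they never did). takeover_timeout_s is a
    HYPOTHETICAL parameter: the article does not say how long to wait.
    """
    if response_time_s is not None and response_time_s <= takeover_timeout_s:
        return "human driving"
    # No timely takeover: perform a minimal risk maneuver (MRM) to a safe stop.
    return "minimal risk maneuver (MRM): safe stop"
```

For example, `end_of_odd_handoff(None, takeover_timeout_s=10.0)` returns the MRM outcome; the 10-second value is arbitrary. Making the timeout an explicit parameter highlights the very question the Level 3 discussion leaves open: how long the system should wait before giving up on the human.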

The simplified approach proposed by Mobileye clearly outlines the parameters of the shared driving experience, thereby removing, from the end user’s perspective, the ambiguities inherent in the SAE taxonomy. In short, the human driver is either (1) being supervised by the system, (2) supervising the system, or (3) not part of the driving experience within prescribed ODDs.

Employing this simplified framework, Mobileye also outlined an evolutionary path to commercially viable, full Eyes-off/Hands-off technology through the incremental development of what it calls “autonomous blades.” Mobileye’s path to full autonomy starts with an Eyes-on/Hands-off autonomous blade in a defined ODD that gathers data to evaluate performance. Once the system is validated, it can be safely and easily transitioned to an Eyes-off/Hands-off autonomous blade within the same prescribed ODD. As redundant sensor set technology advances, this process can be repeated across an ever-expanding stack of ODDs, starting with highways and off-ramps, then gradually extending to arterial roads, then to complex urban and rural environments, and finally to autonomy everywhere.

Companies like Mobileye claim to already have the technological backbone needed to enable Eyes-on/Hands-off driving in the initial highway ODD, such that the transition to an Eyes-off/Hands-off blade would simply require collecting data to verify acceptable end-to-end failure rates. Once that is accomplished, the Eyes-on/Hands-off blade would again be used, with improved sensor sets, to expand into the next ODD, i.e., arterial roads, and the entire process would simply wash, rinse and repeat until full autonomy is reached. Under this model, added redundancy in the perception system is the only advancement needed to make each incremental leap from an Eyes-on to an Eyes-off blade.
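The “wash, rinse and repeat” process of the two paragraphs above can be sketched as a loop over an ordered stack of ODDs. This is a hypothetical illustration, not Mobileye’s actual method: `ODD_STACK` restates the article’s ordering, while `Blade`, `advance`, `observed_failure_rate` and `target_rate` are placeholders for whatever validation data and acceptance thresholds an OEM would actually use.

```python
from dataclasses import dataclass
from typing import List

EYES_ON = "Eyes-on/Hands-off"
EYES_OFF = "Eyes-off/Hands-off"

# ODD expansion order as described in the article.
ODD_STACK = [
    "highways and off-ramps",
    "arterial roads",
    "complex urban and rural environments",
    "everywhere",
]


@dataclass(frozen=True)
class Blade:
    odd: str   # one entry from ODD_STACK
    mode: str  # EYES_ON or EYES_OFF


def advance(blades: List[Blade], observed_failure_rate: float,
            target_rate: float) -> List[Blade]:
    """Take one step along the blade path and return the new list of blades.

    observed_failure_rate and target_rate are HYPOTHETICAL inputs standing in
    for the end-to-end failure-rate data and acceptance threshold an OEM
    would actually use; the article gives no figures.
    """
    current = blades[-1]
    if current.mode == EYES_ON:
        # Eyes-on blade: promote to Eyes-off in the SAME ODD once the
        # collected data meets the target; otherwise keep collecting.
        if observed_failure_rate <= target_rate:
            return blades[:-1] + [Blade(current.odd, EYES_OFF)]
        return blades
    # Eyes-off blade already validated: open an Eyes-on blade in the next
    # ODD (which, per the article, requires improved sensor sets).
    next_index = ODD_STACK.index(current.odd) + 1
    if next_index < len(ODD_STACK):
        return blades + [Blade(ODD_STACK[next_index], EYES_ON)]
    return blades  # final ODD reached: autonomy everywhere
```

Starting from `[Blade(ODD_STACK[0], EYES_ON)]`, one call with an observed rate at or below the target promotes the highway blade to Eyes-off, and the next call opens an Eyes-on blade on arterial roads. Keeping the ODD fixed during promotion mirrors the article’s point that the Eyes-off transition happens within the same prescribed ODD.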

In theory, Mobileye’s path to autonomy sounds compelling and attainable. In reality, however, the success of this formula will likely hinge on OEMs’ appetite for the significant, ongoing financial investment needed to validate each autonomous blade as it transitions from Eyes-on to Eyes-off. To date, only Tesla has been able to convince (or, as critics say, trick) its customers into funding the validation stage of its Eyes-on FSD system while it collects data and attempts to transition to an Eyes-off system. Regardless of the eventual outcome, Mobileye certainly seems to have moved the needle toward commercial viability by stripping away the esoteric shell surrounding AV technology.

Copyright Nelson Niehaus LLC

The opinions expressed in this blog are those of the author(s) and do not necessarily reflect the views of the Firm, its clients, or any of its or their respective affiliates. This blog post is for general information purposes and is not intended to be and should not be taken as legal advice.